Transfer learning is a powerful technique that has been revolutionizing the field of machine learning by enabling models to leverage knowledge gained from one task and apply it to a different but related task. By transferring learned features or weights from pre-trained models, developers can significantly reduce training time and data requirements, leading to faster and more efficient model development. In this blog post, we will explore how transfer learning drives innovation in machine learning and discuss its impact on various industries.

Key Takeaways:

- Efficiency: Transfer learning allows models to leverage knowledge gained from one task to another, reducing the need for extensive training on new datasets.

- Generalization: By transferring knowledge from one domain to another, transfer learning enables models to generalize better and perform well on tasks with limited labeled data.

- Rapid Development: Transfer learning accelerates the development of new applications and innovations in machine learning by speeding up the training process and improving the overall performance of models.

Understanding Transfer Learning

Definition and Principles

The concept of transfer learning involves leveraging knowledge gained while solving one problem and applying it to a different but related problem. This approach is based on the idea that models trained on one task can be useful in solving a different task, saving time and resources required to train a model from scratch.

Transfer learning rests on two assumptions. First, the knowledge gained on the source task is relevant to the target task. Second, in the typical setting, labeled data for the target task is scarce, which is what makes reusing source knowledge worthwhile. When these assumptions hold, transferring knowledge from a source domain to a target domain helps models generalize better and reach higher performance than training on the limited target data alone.
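To pin down what "domain" and "task" mean in this discussion, here is the formalization commonly used in the transfer learning literature. It is standard notation, not something specific to this post:

$$
\mathcal{D} = \{\mathcal{X},\, P(X)\}, \qquad \mathcal{T} = \{\mathcal{Y},\, f(\cdot)\}
$$

A domain $\mathcal{D}$ pairs a feature space $\mathcal{X}$ with a marginal distribution $P(X)$. A task $\mathcal{T}$ pairs a label space $\mathcal{Y}$ with a predictive function $f(\cdot)$ learned from data. Given a source pair $(\mathcal{D}_S, \mathcal{T}_S)$ and a target pair $(\mathcal{D}_T, \mathcal{T}_T)$, transfer learning aims to improve the target function $f_T(\cdot)$ using knowledge from $\mathcal{D}_S$ and $\mathcal{T}_S$, where

$$
\mathcal{D}_S \neq \mathcal{D}_T \quad \text{or} \quad \mathcal{T}_S \neq \mathcal{T}_T .
$$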

Types of Transfer Learning

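There are three main types of transfer learning: inductive transfer, transductive transfer, and unsupervised transfer. In inductive transfer, the target task differs from the source task (the domains may or may not be the same), and some labeled data is available for the target task. Transductive transfer keeps the task the same but changes the domain, and labeled data is available only in the source domain. Domain adaptation is the classic example. Unsupervised transfer also involves different but related tasks. However, no labeled data is available in either domain, so it targets unsupervised tasks such as clustering and dimensionality reduction.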

- Knowledge can be transferred from a source domain to a target domain when labeled data is plentiful for the source task but limited for the target task.

- The features learned in the source domain are assumed to be general enough to be fine-tuned for the target domain.

Another Perspective on Types of Transfer Learning

In addition to the three main types mentioned earlier, transfer learning can be categorized by how closely the source and target domains are related: related, partially related, or unrelated. Models benefit most when the domains are related, because more of the learned features carry over. Between unrelated domains, transfer offers little benefit and can even degrade performance. This problem is known as negative transfer.

- Transfer learning can be particularly effective when the source and target domains are partially related, as some features can still be transferred and adapted to improve model performance.

- In partially related domains, transfer learning can also lead to faster convergence and better overall performance than training from scratch.

How Transfer Learning Drives Innovation

Improving Model Accuracy

If you are looking to enhance the accuracy of your machine learning models, transfer learning can be a powerful tool. By leveraging pre-trained models and fine-tuning them on your specific dataset, you can achieve improved performance without the need to start from scratch. This approach is especially valuable when working with limited data, as it allows you to benefit from the knowledge stored in the pre-trained model.

Reducing Training Time and Cost

With transfer learning, you can significantly reduce the time and cost required to train a machine learning model. Instead of training a model from scratch, which can be computationally expensive and time-consuming, you can start with a pre-trained model and fine-tune it on your data. This process requires less data and fewer computational resources, making it a more efficient solution for many machine learning tasks.
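The warm-start idea behind fine-tuning can be illustrated without a deep learning framework. This is a toy sketch under stated assumptions:

- A logistic-regression model stands in for a deep network.
- The source and target tasks are synthetic, and the target's hidden rule is a small perturbation of the source's.
- All names, such as `train_logreg` and `make_data`, are hypothetical.

Both runs get the same small training budget on 30 target examples. One starts from the "pre-trained" source weights and the other starts from zeros.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 20  # number of input features

def make_data(w_true, n):
    """Hypothetical synthetic data: labels come from a hidden linear rule."""
    X = rng.normal(size=(n, d))
    y = (X @ w_true > 0).astype(float)
    return X, y

def train_logreg(X, y, w_init, lr=0.5, steps=100):
    """Full-batch gradient descent on the mean logistic (cross-entropy) loss."""
    w = w_init.copy()
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))  # predicted probabilities
        w -= lr * X.T @ (p - y) / len(y)    # gradient step
    return w

def accuracy(w, X, y):
    return float(np.mean((X @ w > 0) == y))

# Related tasks: the target rule is a slightly perturbed source rule.
w_source_true = rng.normal(size=d)
w_target_true = w_source_true + 0.2 * rng.normal(size=d)

X_src, y_src = make_data(w_source_true, 5000)  # plentiful source data
X_tgt, y_tgt = make_data(w_target_true, 30)    # scarce target data
X_test, y_test = make_data(w_target_true, 2000)

# "Pre-train" once on the source task.
w_pretrained = train_logreg(X_src, y_src, np.zeros(d), steps=500)

# Same small training budget on the target task: warm start vs. cold start.
w_finetuned = train_logreg(X_tgt, y_tgt, w_pretrained, steps=20)
w_scratch = train_logreg(X_tgt, y_tgt, np.zeros(d), steps=20)

acc_finetuned = accuracy(w_finetuned, X_test, y_test)
acc_scratch = accuracy(w_scratch, X_test, y_test)
print(f"fine-tuned from source weights: {acc_finetuned:.3f}")
print(f"trained from scratch:           {acc_scratch:.3f}")
```

On data like this, the warm-started model should come out ahead because it begins close to a good solution. With an unrelated source task, that head start would disappear.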

Reducing the training time and cost associated with developing machine learning models can open up new opportunities for businesses and researchers. By streamlining the model development process, transfer learning enables faster experimentation and iteration, ultimately leading to quicker innovation and deployment of machine learning solutions.

Enhancing Generalizability

Transfer learning plays a crucial role in enhancing the generalizability of models, that is, their ability to perform well on new, unseen data. By leveraging knowledge from pre-trained models, transfer learning helps models adapt to different tasks and datasets. This makes them more versatile and robust in real-world applications.

A pre-trained model has already learned from a large and diverse dataset. As a result, a model fine-tuned from it can generalize better to new data, leading to more reliable and consistent performance. This enhanced generalizability is crucial for building trustworthy machine learning systems that can effectively handle a wide range of real-world scenarios.

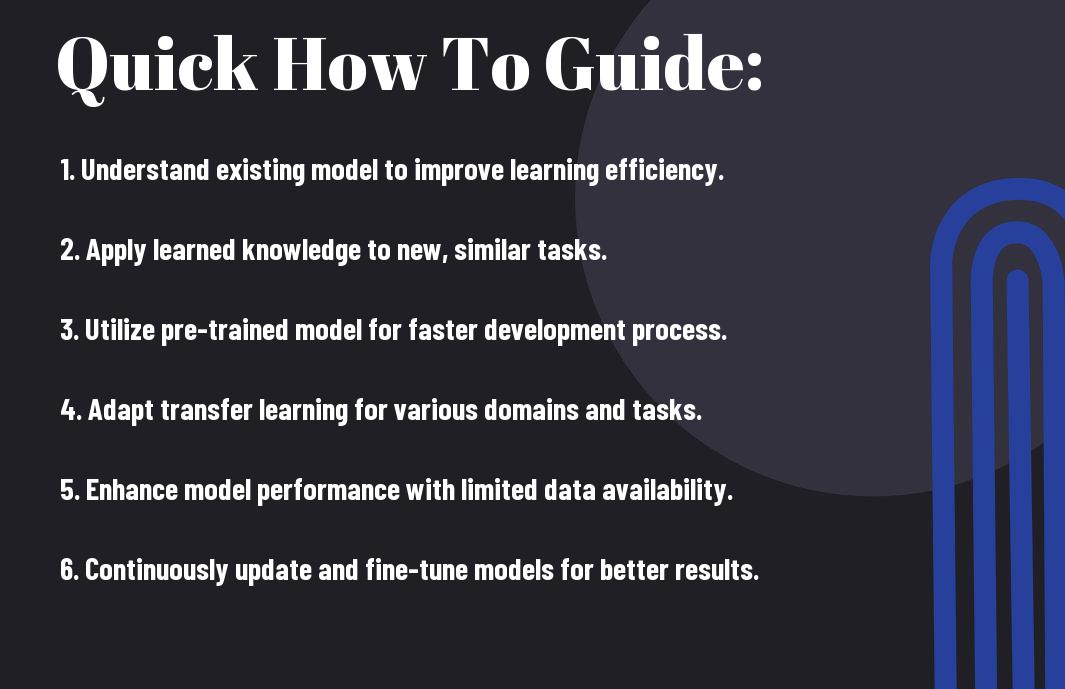

Tips for Implementing Transfer Learning

Transfer learning is a powerful tool for driving innovation in machine learning, but applying it well takes some care. To implement transfer learning successfully in your projects, consider the following tips:

- Choosing the Right Pre-Trained Model

- Fine-Tuning vs. Feature Extraction

- Dealing with Overfitting and Underfitting

Choosing the Right Pre-Trained Model

With so many pre-trained models available, selecting the right one for your specific task is crucial. Consider three factors: how similar the pre-trained model’s source domain is to your target domain, how large the model is, and how much compute fine-tuning it will require.

Fine-Tuning vs. Feature Extraction

Fine-tuning involves updating the weights of the pre-trained model during training on the new dataset, allowing the model to learn task-specific patterns. On the other hand, feature extraction involves using the pre-trained model as a fixed feature extractor and training a new classifier on top of the extracted features. The choice between fine-tuning and feature extraction depends on the size of your dataset, the similarity of the tasks, and the computational resources available.
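The difference between the two approaches comes down to which parameters receive gradient updates. This NumPy sketch uses a tiny one-hidden-layer network as an assumption for illustration:

- The hidden layer `W1` plays the role of the pre-trained body.
- `w2` is a freshly initialized task head.

Both tasks and all names are hypothetical. Feature extraction updates only `w2`, while fine-tuning updates both.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, y, W1, w2, lr=0.1, steps=200, freeze_body=False):
    """Full-batch gradient descent on a one-hidden-layer network.

    W1 is the shared "body" (feature extractor); w2 is the task "head".
    freeze_body=True updates only the head (feature extraction);
    freeze_body=False updates both (fine-tuning)."""
    W1, w2 = W1.copy(), w2.copy()
    for _ in range(steps):
        H = np.tanh(X @ W1)                   # hidden features
        err = (sigmoid(H @ w2) - y) / len(y)  # d(mean cross-entropy)/d(logit)
        grad_w2 = H.T @ err
        if not freeze_body:
            # Backpropagate through the head (current w2) and the tanh layer.
            grad_W1 = X.T @ (np.outer(err, w2) * (1.0 - H**2))
            W1 -= lr * grad_W1
        w2 -= lr * grad_w2
    return W1, w2

def accuracy(W1, w2, X, y):
    return float(np.mean((np.tanh(X @ W1) @ w2 > 0) == y))

rng = np.random.default_rng(1)
d, h = 10, 16

# Hypothetical source task with plenty of data: pre-train body and head.
X_src = rng.normal(size=(2000, d))
y_src = (X_src[:, 0] + X_src[:, 1] > 0).astype(float)
W1_pre, _ = train(X_src, y_src, rng.normal(scale=0.5, size=(d, h)),
                  np.zeros(h), lr=0.5, steps=300)

# Related target task with little data; the source head is discarded.
X_tgt = rng.normal(size=(50, d))
y_tgt = (X_tgt[:, 0] + X_tgt[:, 2] > 0).astype(float)
X_test = rng.normal(size=(1000, d))
y_test = (X_test[:, 0] + X_test[:, 2] > 0).astype(float)
new_head = np.zeros(h)

W1_fe, w2_fe = train(X_tgt, y_tgt, W1_pre, new_head, freeze_body=True)
W1_ft, w2_ft = train(X_tgt, y_tgt, W1_pre, new_head)

print("feature extraction:", accuracy(W1_fe, w2_fe, X_test, y_test))
print("fine-tuning:       ", accuracy(W1_ft, w2_ft, X_test, y_test))
```

After training, `W1_fe` is identical to the pre-trained weights, while `W1_ft` has moved. In frameworks such as PyTorch, the same choice is usually expressed by setting `requires_grad = False` on the pre-trained parameters before training.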

Dealing with Overfitting and Underfitting

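Data augmentation, dropout layers, and early stopping help prevent overfitting, which is the main risk when fine-tuning a large pre-trained model on a small dataset. Underfitting calls for the opposite adjustments, such as unfreezing more pre-trained layers, training longer, or choosing a higher-capacity model. In both cases, regularization and monitoring model performance on a validation set are vital for achieving good results.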
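Early stopping is easy to express independently of any framework. The sketch below assumes you record one validation loss per epoch. The function name and its `patience`/`min_delta` parameters are illustrative and not taken from a specific library.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs; the weights saved at
    best_epoch are the ones to keep."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.49]
print(early_stopping(losses))  # (3, 6)
```

With a patience of 3, training stops at epoch 6 and keeps the weights from epoch 3, so it misses the later improvement at epoch 7. A larger patience trades extra training time for a better chance of catching such late improvements. Keras offers similar behavior through its `EarlyStopping` callback (see its `patience` and `restore_best_weights` arguments).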

Factors Affecting Transfer Learning Success

- Data Quality and Quantity

- Model Complexity and Capacity

- Domain Similarity and Shift

Data Quality and Quantity

Quality and quantity of data are crucial factors that influence the success of transfer learning. In transfer learning, the model learns from the patterns in the source domain data and applies them to the target domain. If the source data is of low quality or insufficient quantity, it can negatively impact the model’s ability to generalize to the new domain effectively.

Model Complexity and Capacity

The model’s complexity and capacity are also key considerations in transfer learning. A complex, high-capacity model may perform well on the source domain but struggle to adapt to the target domain if it has overfit the source data. On the other hand, a model with too little capacity may fail to capture the underlying patterns in the data, which limits how much useful knowledge it can transfer.

Striking the right balance is therefore vital. The model needs enough capacity to learn features that transfer, but not so much that it overfits, particularly when the target dataset is small.

Domain Similarity and Shift

The similarity between the source and target domains, as well as any domain shift between them, significantly affects transfer learning success. If the domains are vastly different, or if the data distribution shifts between them, transferring knowledge becomes more challenging.

Complexity arises when dealing with domain shifts, as the model needs to adapt to new patterns and variations present in the target domain while leveraging knowledge from the source domain. Understanding the domain relationships and implementing strategies to address domain shifts are vital for successful transfer learning outcomes.
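One common way to quantify domain shift is the maximum mean discrepancy (MMD), which compares two samples through a kernel. This NumPy sketch computes a biased estimate of squared MMD with a Gaussian (RBF) kernel. The bandwidth `gamma=0.1` and the synthetic source and target samples are assumptions chosen for illustration, not recommendations.

```python
import numpy as np

def rbf_mmd2(X, Y, gamma=0.1):
    """Biased estimate of squared MMD between samples X and Y using the
    Gaussian (RBF) kernel k(a, b) = exp(-gamma * ||a - b||^2).
    The quantity is mathematically non-negative; values near 0 suggest
    similar distributions, larger values suggest a shift."""
    def kernel(A, B):
        # Pairwise squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b
        sq_dists = (np.sum(A**2, axis=1)[:, None]
                    + np.sum(B**2, axis=1)[None, :]
                    - 2.0 * A @ B.T)
        return np.exp(-gamma * sq_dists)
    return float(kernel(X, X).mean() + kernel(Y, Y).mean()
                 - 2.0 * kernel(X, Y).mean())

rng = np.random.default_rng(0)
source = rng.normal(size=(300, 5))
target_same = rng.normal(size=(300, 5))              # same distribution
target_shifted = rng.normal(loc=1.0, size=(300, 5))  # mean-shifted distribution

print("no shift:  ", rbf_mmd2(source, target_same))
print("with shift:", rbf_mmd2(source, target_shifted))
```

In practice, the bandwidth is often set with a heuristic such as the median pairwise distance. A permutation test is then used to judge whether an observed MMD is larger than chance.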

Real-World Applications of Transfer Learning

Computer Vision and Image Recognition

After being trained on massive datasets like ImageNet, models such as ResNet and Inception have revolutionized the field of computer vision. By leveraging transfer learning, these pre-trained models can be fine-tuned for specific tasks such as object detection, facial recognition, and image classification with significantly less data and computational resources.

There’s a wide range of applications for transfer learning in computer vision, from self-driving cars to medical image analysis. The ability to transfer knowledge from one task to another has accelerated the development of innovative solutions across various industries.

Natural Language Processing and Text Analysis

Some of the most common uses of transfer learning in natural language processing include sentiment analysis, text classification, and language translation. Models like BERT and GPT are pre-trained on massive text corpora and can then be fine-tuned for specific NLP tasks. When they were introduced, this approach achieved state-of-the-art results on many benchmarks with comparatively little task-specific data and training time.

With the rise of chatbots, virtual assistants, and automated content generation, transfer learning has played a pivotal role in advancing the capabilities of NLP systems. By transferring knowledge from general language understanding to domain-specific tasks, these systems can effectively interpret and generate human language.

Speech Recognition and Audio Processing

Voice assistants such as Siri and Google Assistant depend on speech recognition, an area where pre-trained models and transfer learning are widely used. For example, open-source models such as Mozilla’s DeepSpeech can be fine-tuned on additional speech datasets to adapt them to new data, such as a different accent or language. This improves transcription accuracy and enables seamless interaction with electronic devices.

It’s fascinating to see how transfer learning has transformed audio processing, enabling applications like voice-controlled devices, automated transcription services, and speech-to-text applications. By leveraging knowledge from vast audio datasets, these models can understand and interpret human speech with impressive accuracy and efficiency.

Overcoming Challenges in Transfer Learning

Handling Imbalanced Datasets

All machine learning models are susceptible to biases when trained on imbalanced datasets, where one class is heavily outweighed by another. Transfer learning is no exception to this challenge. An imbalanced dataset can lead to poor performance of the model, as it may end up favoring the majority class and ignoring the minority class. This can be particularly problematic when transferring knowledge from a pre-trained model to a new task with imbalanced data distributions.
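To see why a model can look good while ignoring the minority class, consider a hypothetical validation set with 95 majority-class and 5 minority-class examples. The model always predicts the majority class. This pure-Python sketch (function name illustrative) compares plain accuracy with per-class recall and balanced accuracy, which is the mean of the per-class recalls.

```python
def evaluate(y_true, y_pred):
    """Return plain accuracy, per-class recall, and balanced accuracy."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    recalls = {}
    for c in sorted(set(y_true)):
        # Recall for class c: fraction of its true examples predicted as c.
        idx = [i for i, t in enumerate(y_true) if t == c]
        recalls[c] = sum(y_pred[i] == c for i in idx) / len(idx)
    balanced_accuracy = sum(recalls.values()) / len(recalls)
    return accuracy, recalls, balanced_accuracy

y_true = [0] * 95 + [1] * 5   # 95% majority class, 5% minority class
y_pred = [0] * 100            # always predicts the majority class
acc, recalls, bal_acc = evaluate(y_true, y_pred)
print(acc, recalls, bal_acc)  # 0.95 {0: 1.0, 1: 0.0} 0.5
```

The 95% accuracy hides a minority-class recall of zero. Balanced accuracy exposes the problem: its value of 0.5 is no better than chance for two classes.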

Addressing Catastrophic Forgetting

Challenges in transfer learning include addressing catastrophic forgetting, where a model trained on one task forgets how to perform a previous task when learning a new task. This phenomenon can occur when the model fine-tunes its parameters on the new task, leading to the loss of previously learned knowledge. To overcome catastrophic forgetting, techniques such as regularization and rehearsal can be employed to retain important information while adapting to new tasks.

Addressing catastrophic forgetting is crucial in transfer learning, especially when the model needs to continually adapt to new data and tasks without completely erasing previously acquired knowledge.
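The regularization approach can be sketched with a penalty that keeps fine-tuned weights close to the source weights. In the transfer learning literature, this idea is known as L2-SP (an L2 penalty toward the starting point). The NumPy toy below makes two assumptions: logistic regression replaces a deep network, and the two synthetic tasks have deliberately orthogonal hidden rules. The function name and the `lam` values are illustrative. The sketch does not show rehearsal, which instead mixes stored source examples into the fine-tuning data.

```python
import numpy as np

def finetune_l2sp(X, y, w_source, lam=0.0, lr=0.1, steps=300):
    """Fine-tune logistic-regression weights, starting from w_source, on
        mean cross-entropy + (lam / 2) * ||w - w_source||^2.
    lam = 0 is plain fine-tuning; larger lam anchors w to the source weights."""
    w = w_source.copy()
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))
        grad = X.T @ (p - y) / len(y) + lam * (w - w_source)
        w -= lr * grad
    return w

def accuracy(w, X, y):
    return float(np.mean((X @ w > 0) == y))

rng = np.random.default_rng(0)
d = 5
# Hypothetical source task; w_source stands in for pre-trained weights.
w_source = rng.normal(size=d)
X_src = rng.normal(size=(500, d))
y_src = (X_src @ w_source > 0).astype(float)

# A new task whose true rule is orthogonal to the source rule.
v = rng.normal(size=d)
w_new = v - (v @ w_source) / (w_source @ w_source) * w_source
X_new = rng.normal(size=(500, d))
y_new = (X_new @ w_new > 0).astype(float)

results = {lam: finetune_l2sp(X_new, y_new, w_source, lam=lam)
           for lam in (0.0, 0.5, 5.0)}
for lam, w in results.items():
    print(f"lam={lam}: drift={np.linalg.norm(w - w_source):.2f}  "
          f"old-task acc={accuracy(w, X_src, y_src):.2f}  "
          f"new-task acc={accuracy(w, X_new, y_new):.2f}")
```

Larger `lam` values reduce how far the weights drift and preserve more old-task accuracy, at the cost of fitting the new task less well. Elastic Weight Consolidation (EWC) refines the same idea. It weights each parameter’s penalty by an estimate of how important that parameter was for the source task.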

Ensuring Explainability and Transparency

If transfer learning is to be widely adopted in various industries, ensuring explainability and transparency in the model’s decision-making process is crucial. When knowledge is transferred from a pre-trained model to a new task, it can be hard to interpret how the resulting model makes decisions, especially when it is a large, deep network. This lack of transparency can be a hurdle in gaining trust and acceptance of the model’s predictions.

Ensuring explainability and transparency in transfer learning involves techniques such as feature visualization, model interpretability methods, and attention mechanisms. These methods help to provide insights into how the model processes information and makes predictions, making it easier for stakeholders to understand and trust the model’s decisions.
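One concrete, model-agnostic interpretability method is permutation feature importance: shuffle one input feature at a time and measure how much accuracy drops. In this NumPy sketch, the "model" is a hypothetical fixed linear classifier standing in for a fine-tuned network. The labels are generated from it, so the baseline accuracy is 1.0.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn.

    Shuffling a column breaks its relationship with the labels, so a large
    drop means the model relies on that feature."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances

# Hypothetical stand-in for a fine-tuned model: a fixed linear classifier
# that, by construction, ignores feature 2.
weights = np.array([2.0, -1.0, 0.0])

def predict(X):
    return (X @ weights > 0).astype(int)

rng = np.random.default_rng(42)
X_val = rng.normal(size=(500, 3))
y_val = predict(X_val)  # labels the model matches exactly, for illustration only
importances = permutation_importance(predict, X_val, y_val)
print(importances)
```

Because the stand-in model gives feature 2 a weight of zero, shuffling it never changes a prediction, so its importance is exactly 0.0. scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.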

Rebalancing Imbalanced Datasets

Imbalanced datasets can bias a transferred model toward the majority class and weaken its generalization. Rebalancing the training data helps. You can oversample the minority class, undersample the majority class, or generate synthetic minority examples with methods such as SMOTE. These techniques can improve the model’s performance on minority classes.
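Random oversampling is the simplest of these rebalancing techniques. It duplicates minority-class examples until the classes are the same size. Here is a minimal NumPy sketch with a hypothetical function name and a toy dataset containing 8 examples of one class and 2 of the other:

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of each smaller class (with replacement)
    until every class has as many examples as the largest class."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    keep = []
    for c, n in zip(classes, counts):
        idx = np.flatnonzero(y == c)
        extra = rng.choice(idx, size=target - n, replace=True)  # empty for the largest class
        keep.append(np.concatenate([idx, extra]))
    order = rng.permutation(np.concatenate(keep))  # shuffle so classes are mixed
    return X[order], y[order]

X = np.arange(20).reshape(10, 2)   # row i is [2i, 2i + 1]
y = np.array([0] * 8 + [1] * 2)
X_bal, y_bal = random_oversample(X, y)
print(np.unique(y_bal, return_counts=True))
```

Apply oversampling only to the training split, after separating out the validation data. Otherwise, copies of the same example can end up on both sides and inflate validation scores. The imbalanced-learn library provides `RandomOverSampler` and `SMOTE` for production use.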

Conclusion

Summing up, transfer learning plays a crucial role in driving innovation in machine learning. By leveraging knowledge learned from one task to improve learning and performance on another related task, transfer learning enables models to generalize better and make better predictions. This approach significantly reduces the need for vast amounts of labeled data and computational resources, making it more efficient and cost-effective for researchers and developers to create cutting-edge machine learning solutions.

Through transfer learning, researchers can tackle more complex and challenging problems, make advancements in various fields such as healthcare, finance, and robotics, and push the boundaries of what is possible with machine learning. As transfer learning continues to evolve and improve, it will undoubtedly contribute to the development of more intelligent and versatile models that can drive further innovation and bring about real-world applications that benefit society as a whole.

FAQ

Q: What is transfer learning in machine learning?

A: Transfer learning is a machine learning technique where a model developed for a specific task is reused as the starting point for a model on a second task.

Q: How does transfer learning drive innovation in machine learning?

A: Transfer learning accelerates the learning process by leveraging knowledge from one domain to another, reducing the need for extensive labeled data and computing resources.

Q: What are the benefits of using transfer learning in machine learning?

A: Transfer learning enables faster model training, better performance on tasks with limited data, and the ability to build more complex models by fine-tuning existing ones.

Q: When is transfer learning beneficial in machine learning projects?

A: Transfer learning is beneficial when the target task has limited data, the source and target tasks have some similarities, and when computational resources are constrained.

Q: What are some popular applications of transfer learning in machine learning?

A: Transfer learning is widely used in computer vision tasks such as image classification, object detection, and image generation, as well as in natural language processing tasks like text classification, language translation, and sentiment analysis.