Most machine learning algorithms are susceptible to bias, resulting in unfair outcomes that disproportionately affect certain groups. In this blog post, we will explore the concept of adversarial training as a method to mitigate bias in machine learning models. By understanding how adversarial training works and implementing it effectively, we can work towards creating more equitable and unbiased AI systems.

Key Takeaways:

- Adversarial training can help mitigate bias in machine learning models: Adversarial training is a technique that deliberately exposes the model to difficult, perturbed inputs during the training process, making it more robust and less reliant on spurious patterns and biases in the dataset.

- Preventing bias in machine learning models is necessary: Bias in machine learning models can lead to unfair outcomes and discrimination, making it crucial to address and mitigate bias in these models.

- Adversarial training is not a panacea for eliminating bias: While adversarial training can help reduce bias in machine learning models, it is not a complete solution. Other measures and techniques are also required to ensure fairness and reduce bias in machine learning systems.

Understanding Bias in Machine Learning Models

A crucial issue that researchers and developers grapple with in machine learning is bias. Bias in machine learning models refers to systematic errors that can result from erroneous assumptions in the learning algorithm or from unrepresentative or prejudiced training data. These biases can lead to skewed predictions and harmful impacts, particularly when decisions are based on these models.

Types of Bias in Machine Learning

Various types of bias can manifest in machine learning models.

These biases can arise at different stages of the machine learning process and can have significant implications for the accuracy and fairness of the models. Understanding and addressing these biases are crucial steps towards developing more reliable and equitable machine learning systems.

Though bias in machine learning models is a complex and multifaceted issue, efforts to mitigate and prevent bias are crucial for ensuring that AI technologies are trustworthy and beneficial for all users.

Sources of Bias in Machine Learning

To comprehend the sources of bias in machine learning models, developers and researchers must look at various factors that can contribute to bias. These sources can originate from the data used to train the models, the design of the algorithms, or the interpretation of the results.

In addition, societal biases and human prejudices can seep into the machine learning process, leading to biased outcomes. Recognizing and addressing these sources of bias is critical for creating machine learning models that are fair and unbiased.

Consequences of Bias in Machine Learning

Clearly, the consequences of bias in machine learning models can be far-reaching. Biased models can perpetuate and even exacerbate inequalities, reinforce stereotypes, and result in unfair treatment of individuals. Moreover, biased AI systems can undermine trust in technology and hinder the potential benefits that AI can offer.

Bias in machine learning must be actively identified, evaluated, and mitigated to ensure that AI technologies are ethical, reliable, and inclusive. By addressing bias, developers and researchers can move towards creating machine learning models that are more accurate, transparent, and equitable for all users.

What is Adversarial Training?

Definition and Purpose of Adversarial Training

If you’ve been following the latest developments in machine learning, you’ve likely heard about adversarial training. Adversarial training is a technique used to improve the robustness and generalization of machine learning models by incorporating adversarial examples into the training data.

The purpose of adversarial training is to make machine learning models more resilient to adversarial attacks, which are carefully crafted inputs designed to fool the model into making incorrect predictions. By exposing the model to these adversarial examples during training, the hope is that it will learn to generalize better and make more accurate predictions on unseen data.

How Adversarial Training Works

Training a model using adversarial training involves two main steps: generating adversarial examples and updating the model parameters using these examples. During the training process, the model is simultaneously trained on regular examples and their corresponding adversarial counterparts.

By iterating this process multiple times, the model learns to make more robust predictions by adjusting its parameters to account for possible perturbations in the input data.
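The two steps described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: it assumes a logistic regression model, uses the fast gradient sign method (FGSM) as the way of generating adversarial examples, and trains on a made-up toy dataset. The function names, `eps`, `lr`, and the data are all assumptions introduced for this example.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm_example(w, b, x, y, eps):
    # For log loss, the gradient with respect to the input is (p - y) * w.
    # FGSM shifts every feature by eps in the direction of that gradient's sign.
    p = predict_proba(w, b, x)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad_x)]

def sgd_step(w, b, x, y, lr):
    # One stochastic gradient step on the log loss for a single example.
    p = predict_proba(w, b, x)
    new_w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return new_w, b - lr * (p - y)

def adversarial_train(data, eps=0.1, lr=0.1, epochs=50, seed=0):
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm_example(w, b, x, y, eps)  # step 1: craft the adversarial example
            w, b = sgd_step(w, b, x, y, lr)        # step 2a: update on the clean example
            w, b = sgd_step(w, b, x_adv, y, lr)    # step 2b: update on its adversarial twin
    return w, b

# Hypothetical toy data: two well-separated clusters.
toy = [([1.0, 1.2], 1), ([0.8, 1.0], 1), ([1.2, 0.9], 1),
       ([-1.0, -0.8], 0), ([-0.9, -1.1], 0), ([-1.2, -1.0], 0)]
w, b = adversarial_train(toy)
```

Larger values of `eps` produce stronger perturbations, which is the main knob to tune in a setup like this one.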

Benefits of Adversarial Training

Even though adversarial training can be computationally expensive and time-consuming, the benefits are significant. One of the main advantages of adversarial training is its ability to improve the model’s robustness to adversarial attacks, although this often comes with some loss of accuracy on clean data that has to be weighed against the robustness gained.

Adversarial training can also help uncover vulnerabilities in the model and provide insights into potential weaknesses that can be addressed to enhance its overall performance and reliability.

How to Prevent Bias with Adversarial Training

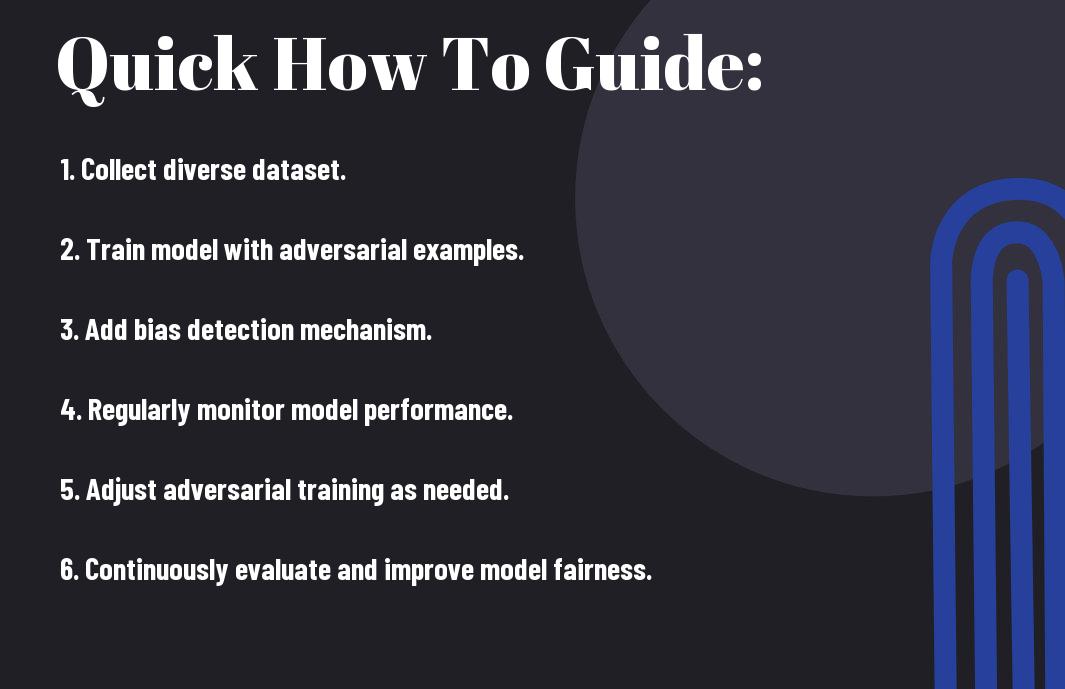

Adversarial training has the potential to mitigate bias in machine learning models, and certain considerations and best practices can enhance its effectiveness. Here are some tips to help implement adversarial training in a way that addresses bias:

Tips for Implementing Adversarial Training

- Start with a diverse and representative dataset to ensure the adversarial training captures a wide range of potential biases.

- Regularly monitor and evaluate the performance of the model post-adversarial training to assess its effectiveness in reducing bias.

- Consider the trade-off between bias reduction and overall model performance to strike a balance that meets the specific needs of the application.

The success of adversarial training in addressing bias hinges on thoughtful implementation and monitoring throughout the process. The effectiveness of this technique relies heavily on the quality of the dataset and the careful calibration of the adversarial training process.

Factors to Consider When Using Adversarial Training

- Adversarial training may introduce additional computational complexity and training time due to the generation of adversarial examples.

Adversarial training adds a layer of complexity to model training, which increases the computational resources and time required. While the benefits in terms of bias reduction can be significant, the additional overhead should be taken into account during planning and resource allocation.

Knowing when to apply adversarial training and how to tune its parameters are crucial factors in effectively leveraging this technique to combat bias in machine learning models. Consideration of these aspects can help ensure that adversarial training is integrated seamlessly into the machine learning pipeline for optimal results.

Best Practices for Adversarial Training

- Prevent overfitting by tuning the strength of the adversarial perturbations and regularization techniques.

It is important to strike a balance between the strength of adversarial perturbations and regularization techniques to prevent overfitting while effectively reducing bias in the model. Regular experimentation and fine-tuning are key to optimizing the adversarial training process for the specific dataset and use case.

Adversarial Training Techniques for Bias Prevention

Data Augmentation Techniques

One way to prevent bias in machine learning models is through data augmentation techniques. An important aspect of data augmentation is to ensure that the training data is representative of the entire population. This can help reduce biases that exist in the dataset and create a more diverse and balanced training set.
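As a rough illustration of balancing a training set, the sketch below oversamples under-represented combinations of group and label until every combination appears equally often. Random oversampling is only one simple option and is an assumption made for this example. The `oversample_to_balance` helper and the toy rows are hypothetical as well.

```python
import random
from collections import Counter, defaultdict

def oversample_to_balance(rows, key, seed=0):
    """Duplicate random rows from smaller buckets until all buckets match the largest."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for row in rows:
        buckets[key(row)].append(row)
    target = max(len(bucket) for bucket in buckets.values())
    balanced = []
    for bucket in buckets.values():
        balanced.extend(bucket)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choice(bucket) for _ in range(target - len(bucket)))
    rng.shuffle(balanced)
    return balanced

# Hypothetical rows of (group, label); group "b" is under-represented.
rows = [("a", 1)] * 6 + [("a", 0)] * 6 + [("b", 1)] * 2 + [("b", 0)] * 4
balanced = oversample_to_balance(rows, key=lambda row: row)
counts = Counter(balanced)
```

Oversampling repeats existing rows rather than adding new information, so it does not replace collecting more representative data.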

Adversarial Example Generation

Adversarial example generation is another technique that can be used to prevent bias in machine learning models. Adversarial examples are input data that are intentionally modified in order to cause machine learning models to make mistakes. By incorporating adversarial examples into the training data, models can learn to be more robust and less susceptible to biases.

Adversarial training involves generating adversarial examples during the training process and using them to improve the model’s performance. This can help the model to better generalize to unseen data and reduce the impact of biases that may exist in the training set.

Ensemble Methods for Adversarial Training

Some ensemble methods can also be employed for adversarial training to prevent bias in machine learning models. By training multiple models with different initializations or architectures, the ensemble can provide a more robust defense against adversarial attacks and biases. Combining the predictions of multiple models can help in making more accurate predictions and reducing the impact of biases present in individual models.

By employing ensemble methods for adversarial training, machine learning models can be better equipped to handle adversarial attacks and prevent biases from affecting their predictions. This approach can improve the overall robustness and fairness of machine learning models in various applications.
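Combining predictions can be as simple as averaging the probabilities produced by each ensemble member. The sketch below assumes each model is a callable that returns a probability for the positive class. The helper names and the three stand-in models are hypothetical and exist only to show the mechanics.

```python
def ensemble_proba(models, x):
    # Average the positive-class probability across all ensemble members.
    probs = [model(x) for model in models]
    return sum(probs) / len(probs)

def ensemble_predict(models, x, threshold=0.5):
    return int(ensemble_proba(models, x) >= threshold)

def disagreement(models, x):
    # Spread between the most and least confident member; a large spread
    # flags inputs on which individual models behave very differently.
    probs = [model(x) for model in models]
    return max(probs) - min(probs)

# Stand-in "models" that ignore their input and return fixed probabilities.
models = [lambda x: 0.9, lambda x: 0.6, lambda x: 0.2]
avg = ensemble_proba(models, x=None)
label = ensemble_predict(models, x=None)
spread = disagreement(models, x=None)
```

In practice the members would be separately trained models, for example with different initializations or architectures as described above.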

Evaluating the Effectiveness of Adversarial Training

Metrics for Measuring Bias in Machine Learning Models

While the concept of bias in machine learning models is complex and multifaceted, several metrics are commonly used to measure it. These include disparate impact, disparate mistreatment, equal opportunity difference, and equalized odds difference. Disparate impact compares the rate of favorable outcomes between groups, usually as a ratio, while disparate mistreatment compares the misclassification rates a model produces for each group. Equal opportunity difference looks at the difference in true positive rate between groups, and equalized odds difference considers the differences in both true positive and false positive rates.
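Three of these metrics can be computed directly from labels, predictions, and group membership. The sketch below is one possible formulation: it assumes binary labels and predictions, defines disparate impact as the ratio of selection rates (unprivileged over privileged), and takes equalized odds difference as the larger of the absolute true positive and false positive rate gaps, which is one common convention among several. The helper names and the example data are hypothetical.

```python
def rate(values):
    # Mean of a list of 0/1 values; NaN when the list is empty.
    return sum(values) / len(values) if values else float("nan")

def group_rates(y_true, y_pred, groups, group):
    idx = [i for i, g in enumerate(groups) if g == group]
    return {
        "selection_rate": rate([y_pred[i] for i in idx]),
        "tpr": rate([y_pred[i] for i in idx if y_true[i] == 1]),
        "fpr": rate([y_pred[i] for i in idx if y_true[i] == 0]),
    }

def fairness_report(y_true, y_pred, groups, unprivileged, privileged):
    u = group_rates(y_true, y_pred, groups, unprivileged)
    p = group_rates(y_true, y_pred, groups, privileged)
    if p["selection_rate"] > 0:
        disparate_impact = u["selection_rate"] / p["selection_rate"]
    else:
        disparate_impact = float("nan")  # ratio is undefined without any selections
    return {
        "disparate_impact": disparate_impact,
        "equal_opportunity_difference": u["tpr"] - p["tpr"],
        "equalized_odds_difference": max(abs(u["tpr"] - p["tpr"]),
                                         abs(u["fpr"] - p["fpr"])),
    }

# Hypothetical labels, predictions, and group membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
report = fairness_report(y_true, y_pred, groups, unprivileged="a", privileged="b")
```

A disparate impact close to 1 and differences close to 0 indicate that the two groups are treated similarly under these definitions.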

How to Evaluate the Success of Adversarial Training

You can evaluate the success of adversarial training by testing the model’s performance on both the original task it was trained for and on fairness metrics computed per group. If the model maintains high performance on the original task while its fairness metrics improve, it indicates that adversarial training has been effective in reducing bias in the model. Additionally, comparing metrics such as disparate impact and equalized odds difference before and after adversarial training can provide insights into the model’s bias reduction.

With the growing awareness of bias in machine learning models, evaluating the success of adversarial training is crucial in ensuring fair and ethical AI systems. By employing rigorous evaluation techniques and considering a range of metrics, researchers and developers can better understand the impact of adversarial training on bias reduction.
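A before-and-after comparison of this kind can be reduced to a small helper. The sketch below assumes binary predictions, uses the gap in positive-prediction rate between groups as a stand-in fairness metric, and treats an accuracy drop of up to 0.05 as acceptable. That threshold, the helper names, and both sets of predictions are illustrative assumptions, not measured results.

```python
def accuracy(y_true, y_pred):
    return sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)

def parity_gap(y_pred, groups):
    # Largest difference in positive-prediction rate between any two groups.
    totals, positives = {}, {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)

def compare_runs(y_true, groups, pred_before, pred_after, max_accuracy_drop=0.05):
    before = {"accuracy": accuracy(y_true, pred_before),
              "parity_gap": parity_gap(pred_before, groups)}
    after = {"accuracy": accuracy(y_true, pred_after),
             "parity_gap": parity_gap(pred_after, groups)}
    return {
        "before": before,
        "after": after,
        "fairer": after["parity_gap"] < before["parity_gap"],
        "accuracy_ok": before["accuracy"] - after["accuracy"] <= max_accuracy_drop,
    }

# Hypothetical predictions from a baseline model and a retrained model.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
pred_before = [1, 0, 0, 0, 1, 1, 1, 0]
pred_after = [1, 1, 0, 0, 1, 0, 1, 0]
result = compare_runs(y_true, groups, pred_before, pred_after)
```

Both sets of predictions should come from the same held-out data, so that the comparison reflects the model change rather than a change in the evaluation set.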

Common Pitfalls in Evaluating Adversarial Training

While adversarial training is a promising approach to reducing bias in machine learning models, there are common pitfalls to be aware of during evaluation. One such pitfall is overfitting to the adversarial examples used during training, which can lead to a model that performs well only on specific examples and fails to generalize to unseen data. Another pitfall is the trade-off between fairness and accuracy – increasing fairness through adversarial training may result in a decrease in overall model performance.

Learning to navigate these pitfalls and striking a balance between fairness and accuracy is vital when evaluating the effectiveness of adversarial training in mitigating bias in machine learning models.

Challenges and Limitations of Adversarial Training

Common Challenges in Implementing Adversarial Training

Adversarial training has gained popularity for its potential in mitigating biases in machine learning models. However, implementing adversarial training comes with its set of challenges. One common challenge is the selection of appropriate hyperparameters for training the adversarial networks. Tuning these hyperparameters can be a time-consuming process, requiring a deep understanding of the model architecture and data distribution.

Another challenge is the trade-off between model performance and computational resources. Adversarial training typically increases the training time and resource requirements due to the adversarial training loop. This can make it challenging to scale adversarial training to larger datasets or complex models.
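To make the hyperparameters mentioned above concrete, here is a sketch of one setup that is often called adversarial debiasing. The post does not name a specific method, so this is a swapped-in illustration: a predictor learns the task while an adversary tries to recover a sensitive attribute from the predictor's output, and the predictor is penalized whenever the adversary succeeds. The logistic models, the `debias_step` function, and the parameters `lr`, `adv_lr`, and `lam` are all assumptions made for this example.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def debias_step(w, b, u, c, x, y, s, lr=0.1, adv_lr=0.1, lam=1.0):
    """One simultaneous update of predictor and adversary on a single example.

    w, b: predictor weights and bias (predicts label y from features x).
    u, c: adversary weight and bias (predicts sensitive attribute s
          from the predictor's logit).
    lam:  how strongly the predictor is penalized when the adversary succeeds.
    """
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = sigmoid(z)          # predictor's estimate of y
    q = sigmoid(u * z + c)  # adversary's estimate of s

    # The predictor minimizes task_loss - lam * adversary_loss.
    # d(task_loss)/dz = p - y and d(adversary_loss)/dz = (q - s) * u.
    grad_z = (p - y) - lam * (q - s) * u
    new_w = [wi - lr * grad_z * xi for wi, xi in zip(w, x)]
    new_b = b - lr * grad_z

    # The adversary minimizes its own log loss, treating z as a fixed input.
    new_u = u - adv_lr * (q - s) * z
    new_c = c - adv_lr * (q - s)
    return new_w, new_b, new_u, new_c

# With lam=0 the predictor update reduces to ordinary logistic regression.
plain = debias_step([0.0, 0.0], 0.0, 1.0, 0.0, [1.0, 2.0], y=1, s=1, lam=0.0)
# With lam=1 the task gradient is offset by the penalty for helping the adversary.
debiased = debias_step([0.0, 0.0], 0.0, 1.0, 0.0, [1.0, 2.0], y=1, s=1, lam=1.0)
```

A full training run would loop this step over the dataset for many epochs. The balance between `lam` and the two learning rates is the kind of tuning that makes this approach time-consuming.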

Limitations of Adversarial Training in Preventing Bias

Adversarial training, while a promising approach, has limitations in fully preventing bias in machine learning models. It can address certain types of biases, such as those related to sensitive attributes like gender or race, but may not effectively capture more nuanced or latent biases present in the data. In some cases, adversarial training can even introduce new forms of bias or overlook existing biases, leading to unintended consequences.

It is important to recognize that adversarial training is not a one-size-fits-all solution for bias mitigation. It requires careful consideration and domain expertise to ensure that the adversarial approach is effectively addressing the specific biases present in the data and model.

Future Directions for Adversarial Training Research

Assuming continued interest in adversarial training for bias mitigation, future research could focus on improving the interpretability of adversarially trained models. This includes developing techniques to explain how adversarial training impacts model decisions and which biases it effectively mitigates. Additionally, exploring ways to automate hyperparameter tuning and optimize computational efficiency in adversarial training could make it more accessible for a wider range of applications.

The evolving landscape of adversarial training research presents exciting opportunities to enhance the effectiveness and applicability of this approach in addressing bias in machine learning models. Collaborative efforts between researchers, practitioners, and policymakers will be crucial in advancing the field of adversarial training for bias mitigation.

Summing up

To sum up, adversarial training presents a promising approach to reducing bias in machine learning models by explicitly factoring in fairness constraints during the training process. By incorporating adversarial examples that aim to fool the model into making biased predictions, these techniques can help mitigate the impact of biases in the data. However, it is crucial to note that adversarial training is not a one-size-fits-all solution and requires careful consideration of the specific biases present in the dataset and the potential trade-offs it may introduce.

FAQ

Q: What is adversarial training in machine learning?

A: Adversarial training is a technique used in machine learning to improve the robustness and generalization of models by exposing them to adversarial examples during training.

Q: How does adversarial training work to prevent bias in machine learning models?

A: Adversarial training introduces small, carefully crafted perturbations to the input data to trick the model into making incorrect predictions. By training on these adversarial examples, the model learns to be more robust and less biased in its decision-making.

Q: Can adversarial training help reduce bias in machine learning models?

A: Yes, adversarial training can help reduce bias in machine learning models by encouraging them to make decisions based on the underlying patterns in the data rather than on superficial features that may lead to biased outcomes.

Q: Are there any limitations to using adversarial training to prevent bias in machine learning models?

A: While adversarial training can be effective in reducing bias, it may not completely eliminate bias in machine learning models. Additionally, adversarial training can be computationally expensive and may require additional resources to implement effectively.

Q: How can adversarial training be combined with other techniques to address bias in machine learning models?

A: Adversarial training can be combined with techniques such as data augmentation, fairness constraints, and bias detection algorithms to create more robust and less biased machine learning models. By using a combination of approaches, developers can better address bias in their models.