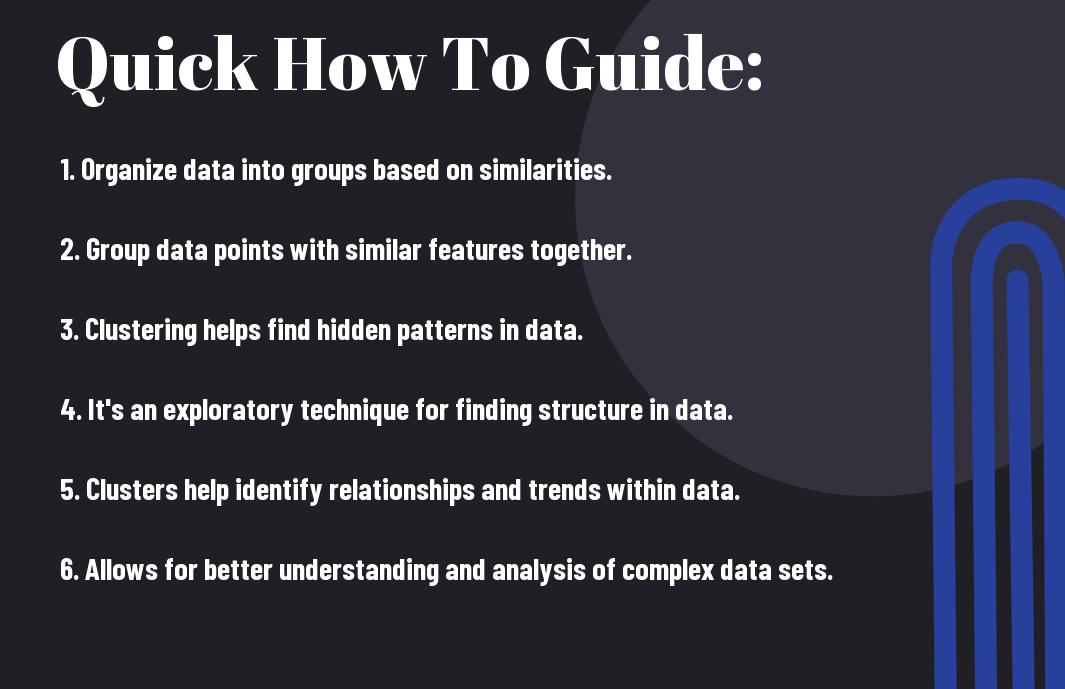

Clustering is a fundamental technique in unsupervised machine learning. It groups similar data points together so that we can discover patterns and structure in unlabeled datasets. This guide explores the role of clustering in unsupervised learning and how it helps make sense of complex data without predefined categories or labels.

Key Takeaways:

- Organizing Similar Data Points: Clustering helps in grouping similar data points together based on shared characteristics without any predefined labels.

- Understanding Data Patterns: Clustering allows us to discover hidden patterns and structures within the data which can be useful for making informed decisions.

- Feature Selection and Dimensionality Reduction: Clustering can be used for feature selection and dimensionality reduction by grouping similar features together, simplifying the dataset for further analysis.

Understanding Clustering in Unsupervised Machine Learning

Definition and Purpose of Clustering

The process of clustering in unsupervised machine learning involves grouping a set of objects in such a way that objects in the same group (or cluster) are more similar to each other than to those in other groups. The primary purpose of clustering is to discover the inherent structure present in the data without the need for labeled outcomes. It is used to identify patterns, detect outliers, reduce dimensionality, and improve data understanding.

Types of Clustering: Hierarchical, K-Means, and DBSCAN

There are several types of clustering algorithms commonly used in unsupervised machine learning, including Hierarchical Clustering, K-Means Clustering, and DBSCAN (Density-Based Spatial Clustering of Applications with Noise). Hierarchical clustering creates a tree of clusters, where each node is a cluster consisting of the combined clusters of its children. K-Means clustering partitions the data into K clusters based on centroids, iteratively optimizing the positions of the centroids to minimize the within-cluster variance. DBSCAN identifies clusters as dense regions separated by sparser regions of data points.

| Algorithm | Approach |
| --- | --- |
| Hierarchical Clustering | Creates a tree of clusters |
| K-Means Clustering | Partitions data into K clusters based on centroids |
| DBSCAN | Identifies clusters as dense regions |
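To make the K-Means description concrete, here is a minimal sketch in plain Python. It is an illustration, not a production implementation: the function names `init_centroids` and `kmeans`, the toy data, and the farthest-first initialisation are all assumptions chosen to keep the example deterministic. In practice a library implementation such as scikit-learn's `KMeans` would normally be used.

```python
import math


def init_centroids(points, k):
    """Farthest-first initialisation (an assumption made for this sketch)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Pick the point whose nearest existing centroid is farthest away.
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(points, k, max_iter=100):
    """Alternate assignment and centroid-update steps until labels stop changing."""
    centroids = init_centroids(points, k)
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:
            break  # assignments are stable, so the procedure has converged
        labels = new_labels
    return labels, centroids


# Toy data: two well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
labels, centroids = kmeans(points, k=2)
```

On this toy data the first three points end up in one cluster and the last three in the other. Results on real data depend heavily on initialisation and on the choice of K.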

On a practical note, choosing the right clustering algorithm depends on the structure of the data and the goals of the analysis. Understanding the differences between these methods and where each one applies is crucial for successful clustering.

How to Choose the Right Clustering Algorithm

Factors to Consider: Data Size, Dimensionality, and Noise

You have different algorithms to choose from when it comes to clustering, and selecting the right one can significantly impact the results. Factors such as the size of your data set, the dimensionality of your data, and the presence of noise play a crucial role in determining the most suitable clustering algorithm for your specific task.

- Consider the size of your data set: Some algorithms are more scalable and efficient with large data sets than others.

- Think about the dimensionality of your data: High-dimensional data may require algorithms that can handle this complexity effectively.

- Account for noise in your data: Certain algorithms are more robust to noise and outliers than others.

Understanding the characteristics of your data in terms of size, dimensionality, and noise can help guide you in choosing the best clustering algorithm for your particular problem.
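The point about noise can be illustrated with a small density-based sketch in the spirit of DBSCAN. This is a simplified plain-Python version written for readability, with a brute-force neighbour search. The function name `dbscan`, the parameter names `eps` and `min_pts`, and the toy data are assumptions for illustration only.

```python
import math

NOISE = -1


def dbscan(points, eps, min_pts):
    """Label dense regions as clusters 0, 1, ... and isolated points as NOISE."""
    labels = [None] * len(points)  # None means "not yet visited"

    def neighbours(i):
        # Brute-force search; the point itself counts as its own neighbour.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbours(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)  # j is a core point, so keep expanding
        cluster += 1
    return labels


# Two dense groups plus one isolated point.
data = [
    (1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (1.1, 1.2),
    (8.0, 8.0), (8.2, 7.9), (7.9, 8.3), (8.1, 8.2),
    (4.5, 15.0),
]
noise_labels = dbscan(data, eps=0.6, min_pts=3)
```

Here the isolated point receives the label `-1` instead of being forced into a cluster, which is the behaviour that makes density-based methods attractive for noisy data. The values of `eps` and `min_pts` were picked by hand for this toy set and usually need tuning.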

Tips for Selecting the Best Algorithm for Your Data

There’s an array of clustering algorithms available, each with its strengths and weaknesses. To select the most suitable algorithm for your data, consider the following tips:

- Understand the nature of your data: Different algorithms make different assumptions about the structure of the data, so pick one that aligns with your data characteristics.

- Experiment with multiple algorithms: It’s often helpful to try out several algorithms and compare their performance to see which one provides the best results for your specific dataset.

- Pay attention to the interpretability of the results: Some algorithms may provide more interpretable clusters than others.

An in-depth understanding of your data and the specific requirements of your clustering task can guide you in the selection of the most appropriate algorithm. By exploring various algorithms and considering their suitability for your data, you can enhance the quality and relevance of your clustering results.

Preprocessing Data for Clustering

Handling Missing Values and Outliers

After collecting data for an unsupervised machine learning task like clustering, it is crucial to preprocess the data to ensure the quality and effectiveness of the clustering algorithm. A necessary step in preprocessing is handling missing values and outliers. Missing values can significantly impact the clustering process, as many algorithms cannot handle them. One common approach is to impute missing values by replacing them with the mean, median, or mode of the respective attribute. Outliers deserve similar attention, because extreme values can pull centroids and distort distance-based algorithms such as K-Means.
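As a minimal sketch of the imputation approach described above, the following plain-Python helper fills missing entries in a single column. It assumes missing values are represented as `None`. The function name `impute_column` and the sample values are hypothetical.

```python
import statistics


def impute_column(values, strategy="mean"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    strategies = {
        "mean": statistics.mean,
        "median": statistics.median,
        "mode": statistics.mode,
    }
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = strategies[strategy](observed)
    return [fill if v is None else v for v in values]


column = [1.0, None, 3.0, 8.0]
filled_mean = impute_column(column, "mean")
filled_median = impute_column(column, "median")
```

The median is often the safer choice when a column contains outliers, since a single extreme value shifts the mean but not the median.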

Normalization and Feature Scaling Techniques

Normalization plays a vital role in preprocessing data for clustering tasks. It ensures that all the features contribute equally to the similarity measures and prevents attributes with large scales from dominating the clustering process. Techniques like Min-Max scaling, Z-score normalization, and Robust scaling are commonly used to normalize the data. Feature scaling techniques transform the data into a similar range, making it easier for clustering algorithms to identify meaningful patterns and clusters in the data.

When choosing a normalization or feature scaling technique, consider the distribution and scale of the data attributes. For example, if the features have varying scales, applying Min-Max scaling can help in bringing all features to a uniform scale between 0 and 1. On the other hand, Z-score normalization can be more suitable when the data follows a Gaussian distribution.
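The two techniques just mentioned can be sketched for a single feature as follows. This is a plain-Python illustration using the population standard deviation. The function names `min_max_scale` and `z_score_scale` and the sample values are assumptions.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to rescale
    return [(v - lo) / (hi - lo) for v in values]


def z_score_scale(values):
    """Centre values on the mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature
    return [(v - mean) / std for v in values]


feature = [10, 20, 30]
scaled = min_max_scale(feature)
standardized = z_score_scale(feature)
```

After Min-Max scaling the values lie in the range 0 to 1, while the standardized values have mean 0 and standard deviation 1. Each feature is scaled independently before distances are computed.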

Evaluating Clustering Performance

Evaluating clustering performance shows how effectively an unsupervised machine learning model groups similar data points together. Because there are usually no labels to compare against, this evaluation relies mainly on measures of cohesion within clusters and separation between them.

Metrics for Measuring Clustering Quality: Silhouette Score, Calinski-Harabasz Index, and Davies-Bouldin Index

Measuring clustering quality involves using various metrics such as the Silhouette Score, Calinski-Harabasz Index, and Davies-Bouldin Index. These metrics help quantify how well the data points are clustered and the separation between clusters. The Silhouette Score measures how similar an object is to its own cluster compared to other clusters, with values ranging from -1 to 1. The Calinski-Harabasz Index evaluates the ratio of between-cluster dispersion to within-cluster dispersion. The Davies-Bouldin Index calculates the average similarity between each cluster and its most similar cluster.
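To show what the Silhouette Score measures, here is a minimal plain-Python sketch using Euclidean distance. For each point it computes `a`, the mean distance to the other members of its own cluster, and `b`, the smallest mean distance to the members of any other cluster, then averages `(b - a) / max(a, b)` over all points. The function name and toy data are assumptions, and scoring singleton clusters as 0 is a convention adopted for this sketch.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("the silhouette score needs at least two clusters")

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # convention used here for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(p, q) for q in other) / len(other)
            for other_lab, other in clusters.items()
            if other_lab != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


pts = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
good = silhouette_score(pts, [0, 0, 0, 1, 1, 1])  # labels match the two groups
bad = silhouette_score(pts, [0, 1, 0, 1, 0, 1])   # labels cut across the groups
```

The labelling that matches the two visible groups scores close to 1, while the labelling that mixes them scores much lower.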

How to Interpret Clustering Evaluation Metrics

A crucial aspect of evaluating clustering performance is understanding how to interpret clustering evaluation metrics. By analyzing metrics like the Silhouette Score, Calinski-Harabasz Index, and Davies-Bouldin Index, data scientists can assess the cohesion within clusters and the separation between clusters. Higher Silhouette Scores and Calinski-Harabasz Index values, and lower Davies-Bouldin Index values, indicate better clustering performance.

It is also important to compare these metrics across different clustering algorithms and parameter settings to determine the most suitable model for the dataset. By carefully interpreting these evaluation metrics, data scientists can optimize the clustering process and improve the overall performance of unsupervised machine learning models.

Common Applications of Clustering in Unsupervised Machine Learning

Customer Segmentation and Market Research

Clustering is widely used in unsupervised machine learning for a variety of applications. One of the most common is customer segmentation and market research. By applying clustering algorithms to customer data, businesses can group customers based on their purchasing behavior, demographic information, or other relevant features. This segmentation allows companies to tailor their marketing strategies, improve customer satisfaction, and enhance overall business performance.

Anomaly Detection and Fraud Identification

Clustering also plays a crucial role in anomaly detection and fraud identification. Once data points have been grouped, points that lie far from every cluster stand out as deviations from the normal behavior of the dataset. This helps businesses detect fraudulent activities, network intrusions, or other unusual occurrences that require immediate attention.

Customer behavior is unpredictable, leading to changing patterns in purchasing habits and interactions with businesses. By utilizing clustering techniques, companies can identify and address abnormal behavior effectively, safeguarding their operations.
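One simple way to turn clusters into an anomaly detector is to flag any point that lies farther than some threshold from its nearest cluster centroid. The sketch below assumes the centroids have already been found by a clustering step. The function name `flag_anomalies`, the centroid values, and the threshold are hypothetical and would need to be chosen for a real dataset.

```python
import math


def flag_anomalies(points, centroids, threshold):
    """Return True for each point farther than `threshold` from every centroid."""
    flags = []
    for p in points:
        nearest = min(math.dist(p, c) for c in centroids)
        flags.append(nearest > threshold)
    return flags


# Hypothetical centroids from an earlier clustering run.
known_centroids = [(1.0, 1.0), (8.0, 8.0)]
observations = [(1.1, 0.9), (7.8, 8.2), (4.5, 15.0)]
flags = flag_anomalies(observations, known_centroids, threshold=1.0)
```

Only the third observation is flagged, since it is far from both centroids. Features should be scaled first so that a single distance threshold is meaningful.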

Image Segmentation and Object Recognition

In computer vision, clustering is widely used for image segmentation and object recognition. Clustering algorithms can group pixels in an image based on their color, intensity, or spatial proximity, allowing for the segmentation of different objects or regions within the image. This segmentation is vital for various applications like medical imaging, autonomous driving, and image retrieval.

Image segmentation through clustering helps machines recognize distinct objects within an image, supporting tasks like image classification, object detection, and scene understanding. These capabilities are applied across many industries, including healthcare, automotive, and security.

Overcoming Common Challenges in Clustering

Dealing with High-Dimensional Data and Curse of Dimensionality

A major challenge in clustering is dealing with high-dimensional data, which can lead to the curse of dimensionality. An increase in the number of dimensions in the data can result in increased computational complexity, sparsity of data, and difficulty in visualizing clusters. To address this challenge, dimensionality reduction techniques like Principal Component Analysis (PCA) or t-distributed Stochastic Neighbor Embedding (t-SNE) can be used to project the data into a lower-dimensional space while preserving important features for clustering algorithms.
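As a rough illustration of the idea behind PCA, the sketch below finds only the first principal component by running power iteration on the covariance matrix, then projects the data onto it. This is a simplified plain-Python stand-in, not a full PCA implementation. The function name, iteration count, and toy data are assumptions.

```python
import math


def first_principal_component(rows, iterations=200):
    """Return the leading principal direction and the 1-D projection of the data."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
        for a in range(d)
    ]
    # Power iteration: repeatedly multiply by the covariance matrix and renormalise.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # all features are constant
        v = [x / norm for x in w]
    projected = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return v, projected


# Toy 2-D data lying close to the line y = 2x.
rows = [(0, 0.1), (1, 1.9), (2, 4.1), (3, 5.9), (4, 8.1), (5, 9.9)]
component, projected = first_principal_component(rows)
```

The recovered direction is close to the line the data was generated around, so the one-dimensional projection keeps most of the variation. A clustering algorithm could then be run on `projected` instead of the original features.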

Handling Imbalanced Data and Class Imbalance

Clustering algorithms are not immune to the challenges posed by imbalanced data. When some clusters are much smaller than others, traditional clustering algorithms may struggle to identify and separate these minority clusters. Techniques such as oversampling or undersampling, or density-based algorithms like DBSCAN, which is robust to noise and outliers, can help in handling imbalanced data effectively.

Understanding the distribution of clusters in imbalanced data sets is critical to selecting the appropriate clustering algorithm and evaluating the clustering model accurately. It is important to choose an evaluation metric that accounts for the imbalance in the data. When ground-truth labels are available, the Adjusted Rand Index (ARI) or the Fowlkes-Mallows Index can be used to compare the clustering against them.
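For reference, the Adjusted Rand Index can be computed from pair counts as in the plain-Python sketch below. It assumes a set of reference labels exists and that there are at least two samples. The function name and the example labelings are illustrative.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Chance-corrected agreement between two labelings of the same samples."""
    n = len(labels_true)
    pair_counts = Counter(zip(labels_true, labels_pred))
    true_counts = Counter(labels_true)
    pred_counts = Counter(labels_pred)

    # Number of sample pairs grouped together in both, or in each, labeling.
    sum_pairs = sum(comb(c, 2) for c in pair_counts.values())
    sum_true = sum(comb(c, 2) for c in true_counts.values())
    sum_pred = sum(comb(c, 2) for c in pred_counts.values())

    expected = sum_true * sum_pred / comb(n, 2)  # agreement expected by chance
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both labelings form a single cluster
    return (sum_pairs - expected) / (max_index - expected)


same_grouping = adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])
crossed_grouping = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```

The score depends only on which samples are grouped together, not on the label names, so swapping the cluster ids still gives 1.0. A labeling that cuts across the reference groups scores below zero.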

Summing up

To wrap up, clustering is a crucial technique in unsupervised machine learning that helps identify patterns and groups within a dataset without the need for labeled data. By grouping similar data points together based on certain features, clustering algorithms can provide valuable insights and help in making data-driven decisions. Whether it’s for customer segmentation, anomaly detection, or pattern recognition, clustering plays a vital role in uncovering hidden patterns and structures in data.

FAQ

Q: What is clustering in unsupervised machine learning?

A: Clustering is a technique in unsupervised machine learning where data points are grouped together based on their similarities.

Q: What role does clustering play in unsupervised machine learning?

A: Clustering plays a crucial role in unsupervised machine learning as it helps in identifying inherent patterns and structures within the data without the need for predefined labels.

Q: What are the common types of clustering algorithms used in unsupervised machine learning?

A: Some common types of clustering algorithms used in unsupervised machine learning are K-means clustering, Hierarchical clustering, DBSCAN, and Gaussian Mixture Models.

Q: How does clustering help in data analysis and exploration?

A: Clustering helps in data analysis and exploration by grouping similar data points together, which can provide insights into patterns, relationships, and anomalies within the data.

Q: Can clustering be used for dimensionality reduction in unsupervised machine learning?

A: Yes. Clustering can reduce the complexity of a dataset by representing each group of similar data points with a single cluster centroid, or by grouping similar features together so that fewer dimensions are needed.
