This blog post explores how Bayesian inference can be combined with machine learning to improve predictive accuracy. Bayesian methods capture uncertainty and update beliefs systematically as new data arrives, so they can complement traditional machine learning techniques and lead to more reliable predictions. Whether you’re a seasoned data scientist or a curious beginner, this guide outlines the advantages of adding Bayesian inference to your machine learning toolkit.

Key Takeaways:

- Bayesian inference can improve prediction accuracy: By incorporating prior knowledge and uncertainty into the model, Bayesian inference can provide more reliable and accurate predictions.

- Bayesian models can handle small data sets effectively: Bayesian methods are well-suited for situations where data is limited, as they use prior information to make more robust inferences.

- Bayesian inference can quantify uncertainty: Unlike many traditional machine learning models, which return only a point prediction, Bayesian inference can provide an estimate of uncertainty for each prediction, offering valuable insights for decision-making.

Understanding Bayesian Inference

What is Bayesian Inference?

To understand Bayesian inference, it helps to view probability in a different light. Frequentist statistics treats model parameters as fixed but unknown quantities. In contrast, Bayesian inference treats probabilities as measures of belief or uncertainty, which can change as new data is obtained. It lets us update our beliefs about a model's parameters as more data becomes available, resulting in more accurate and nuanced predictions.

How Bayesian Inference Works

While traditional frequentist methods focus on estimating fixed parameters from data, Bayesian inference incorporates prior beliefs about the parameters to generate a posterior distribution that combines these prior beliefs with the likelihood of the data given the parameters. This posterior distribution represents the updated belief about the parameters after observing the data. By integrating prior knowledge with new data in a coherent probabilistic framework, Bayesian inference provides a flexible and powerful approach to statistical modeling.
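The way a prior and a likelihood combine into a posterior is easiest to see in a conjugate model, where the update has a closed form. The sketch below assumes a coin-flip style problem with a Beta prior and binomial data; the function names and the numbers are hypothetical and chosen only for illustration.

```python
# Conjugate Beta-Binomial update: Beta(a, b) prior + binomial data -> Beta posterior.

def beta_binomial_update(prior_a, prior_b, successes, failures):
    """Return the parameters of the Beta posterior after observing the data."""
    return prior_a + successes, prior_b + failures

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

# Hypothetical example: a weak prior centred on 0.5, then 7 successes in 10 trials.
prior_a, prior_b = 2.0, 2.0
post_a, post_b = beta_binomial_update(prior_a, prior_b, successes=7, failures=3)

print("prior mean:    ", beta_mean(prior_a, prior_b))  # 0.5
print("posterior mean:", beta_mean(post_a, post_b))    # 9/14, about 0.643
```

The posterior mean sits between the prior mean (0.5) and the observed frequency (0.7), which is the "combination" of prior belief and data described above.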

With Bayesian inference, the key step is defining the prior distribution, which represents our beliefs about the parameters before observing any data. The likelihood function quantifies how well the data support different values of the parameters, and the posterior distribution combines the prior and likelihood to reflect updated beliefs after data collection. When the posterior has no closed form, Markov Chain Monte Carlo (MCMC) methods are often used to draw samples from it and obtain estimates of the parameters.
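To make the MCMC idea concrete, here is a minimal random-walk Metropolis sampler written with only the Python standard library. It is a sketch under stated assumptions, not a production sampler: the model (a Beta(2, 2) prior with binomial data), the step size, the burn-in length, and all function names are choices made for this example.

```python
import math
import random

def log_posterior(theta, successes, failures, prior_a=2.0, prior_b=2.0):
    """Unnormalised log posterior: Beta prior times binomial likelihood."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero posterior mass outside (0, 1)
    return ((prior_a - 1 + successes) * math.log(theta)
            + (prior_b - 1 + failures) * math.log(1.0 - theta))

def metropolis(successes, failures, n_samples=20000, step=0.2, seed=0):
    """Random-walk Metropolis sampler for a single parameter in (0, 1)."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_posterior(theta, successes, failures)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)  # symmetric proposal
        candidate = log_posterior(proposal, successes, failures)
        # Accept with probability min(1, posterior ratio).
        accept_prob = math.exp(min(0.0, candidate - current))
        if rng.random() < accept_prob:
            theta, current = proposal, candidate
        samples.append(theta)
    return samples

samples = metropolis(successes=7, failures=3)
kept = samples[2000:]  # discard an assumed burn-in period
posterior_mean = sum(kept) / len(kept)
print("estimated posterior mean:", round(posterior_mean, 3))
```

For this particular model the exact posterior is Beta(9, 5) with mean 9/14, so the sampled estimate can be checked against a known answer. In realistic models no such closed form exists, which is why sampling is needed.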

Key Concepts in Bayesian Inference

Inference in Bayesian statistics involves not just point estimates of parameters but entire distributions that convey uncertainty about the parameter values. This allows for a more comprehensive understanding of the variability and uncertainty in the model estimates. Additionally, Bayesian inference allows for the incorporation of domain knowledge through the choice of prior distributions, enabling the integration of expert opinions or existing information into the analysis.
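Working with a whole distribution instead of a point estimate can be sketched as follows. The example assumes a Beta(9, 5) posterior (for instance, a Beta(2, 2) prior updated with 7 successes and 3 failures) and summarizes it with an equal-tailed 95% credible interval computed from samples. The helper name `credible_interval` is hypothetical.

```python
import random

def credible_interval(samples, mass=0.95):
    """Equal-tailed credible interval estimated from posterior samples."""
    ordered = sorted(samples)
    tail = (1.0 - mass) / 2.0
    lower = ordered[int(tail * len(ordered))]
    upper = ordered[int((1.0 - tail) * len(ordered)) - 1]
    return lower, upper

rng = random.Random(42)
# Assumed posterior for illustration: Beta(9, 5).
posterior_samples = [rng.betavariate(9, 5) for _ in range(50000)]

point_estimate = sum(posterior_samples) / len(posterior_samples)
low, high = credible_interval(posterior_samples)
print(f"posterior mean {point_estimate:.2f}, 95% interval ({low:.2f}, {high:.2f})")
```

The interval is what the point estimate alone hides: with only ten observations, a wide range of parameter values remains plausible.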

Bayesian statistics provides a coherent framework for updating beliefs in the face of uncertainty, making it a valuable tool for improving machine learning accuracy. By taking a probabilistic approach to modeling and inference, Bayesian methods offer a principled way to incorporate prior knowledge, quantify uncertainty, and make more informed predictions based on available data.

The Role of Bayesian Inference in Machine Learning

How Bayesian Inference Can Improve the Handling of Model Uncertainty

Bayesian inference plays a crucial role in quantifying model uncertainty in machine learning. Traditional machine learning models often provide point estimates without accounting for uncertainty. Bayesian techniques, however, allow us to quantify uncertainty and propagate it through the model, giving a clearer picture of how reliable each prediction is.
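As a small illustration of propagating uncertainty into predictions, consider estimating an unknown mean from noisy measurements. The sketch assumes a Normal prior on the mean and a known noise level, so the posterior predictive distribution has a closed form. The default prior and the data are made up for the example.

```python
import math

def normal_posterior(data, prior_mean, prior_sd, noise_sd):
    """Posterior over an unknown mean with a Normal prior and known noise level."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = len(data) / noise_sd ** 2
    post_var = 1.0 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean + sum(data) / noise_sd ** 2)
    return post_mean, math.sqrt(post_var)

def predictive(data, prior_mean=0.0, prior_sd=10.0, noise_sd=1.0):
    """Mean and standard deviation of the posterior predictive for a new observation."""
    post_mean, post_sd = normal_posterior(data, prior_mean, prior_sd, noise_sd)
    # Parameter uncertainty and observation noise both contribute to the spread.
    return post_mean, math.sqrt(post_sd ** 2 + noise_sd ** 2)

few = [2.1, 1.9]   # two hypothetical measurements
many = few * 50    # the same pattern repeated: 100 measurements

mean_few, sd_few = predictive(few)
mean_many, sd_many = predictive(many)
print(f"2 points:   prediction {mean_few:.2f} +/- {sd_few:.2f}")
print(f"100 points: prediction {mean_many:.2f} +/- {sd_many:.2f}")
```

The predictive spread shrinks as data accumulates but never drops below the noise level, because the remaining uncertainty comes from the measurements themselves and not from the parameter.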

The Importance of Prior Distributions in Machine Learning

Prior distributions are among the most critical components of Bayesian inference in machine learning. Priors encode our beliefs about the parameters before observing the data, and they shape the posterior distribution. By incorporating domain knowledge into the modeling process through prior distributions, we can improve the model’s performance and generalization.

Machine learning models trained using Bayesian inference leverage prior knowledge in a formal and consistent way, leading to more robust and interpretable results. Prior distributions act as regularization, preventing overfitting and enhancing the model’s ability to generalize well to unseen data.
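The link between priors and regularization can be checked numerically in a one-parameter linear model. Assuming a zero-mean Gaussian prior with standard deviation `tau` on the slope and Gaussian noise with standard deviation `sigma`, the maximum a posteriori (MAP) slope matches the ridge regression estimate with penalty `sigma**2 / tau**2`. The data and names below are hypothetical.

```python
def ols_estimate(xs, ys):
    """Least-squares slope for y = w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def ridge_estimate(xs, ys, lam):
    """Slope minimising sum((y - w*x)^2) + lam * w^2."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def map_estimate(xs, ys, sigma, tau):
    """Posterior mode of w under a N(0, tau^2) prior and N(0, sigma^2) noise."""
    post_precision = 1.0 / tau ** 2 + sum(x * x for x in xs) / sigma ** 2
    return (sum(x * y for x, y in zip(xs, ys)) / sigma ** 2) / post_precision

xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.2, 1.9, 3.2, 3.8]
sigma, tau = 1.0, 0.5

w_ols = ols_estimate(xs, ys)
w_map = map_estimate(xs, ys, sigma, tau)
w_ridge = ridge_estimate(xs, ys, lam=sigma ** 2 / tau ** 2)
print(w_ols, w_map, w_ridge)
```

A tighter prior (smaller `tau`) corresponds to a larger penalty and pulls the estimate further toward zero, which is the regularizing effect described above.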

Bayesian Inference and Model Selection

Model selection is a fundamental task in machine learning, and Bayesian inference provides a principled framework for this process. By comparing the marginal likelihood of different models, Bayesian inference allows us to not only select the best-performing model but also quantify the uncertainty in this selection process. This is crucial in scenarios where multiple models have similar performance metrics.

Model selection with Bayesian inference helps avoid overfitting. Because the marginal likelihood averages a model's fit over its prior, a complex model that spreads its prior across many parameter settings is penalized unless the data really require the extra flexibility. This leads to more reliable and stable model selection decisions. Additionally, Bayesian model averaging, which weights the predictions of multiple models instead of committing to one, can further enhance predictive performance and robustness.
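A toy comparison shows how the marginal likelihood trades off fit against flexibility. The sketch assumes two hypothetical models for a sequence of coin flips: a simple one that fixes the success probability at 0.5, and a flexible one that places a uniform Beta(1, 1) prior on it. The ratio of their marginal likelihoods is the Bayes factor.

```python
import math

def log_beta(a, b):
    """Logarithm of the Beta function."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

def log_evidence_fixed(successes, trials, theta=0.5):
    """Log marginal likelihood of the model with a fixed success probability."""
    return successes * math.log(theta) + (trials - successes) * math.log(1.0 - theta)

def log_evidence_beta(successes, trials, a=1.0, b=1.0):
    """Log marginal likelihood when the probability has a Beta(a, b) prior."""
    return log_beta(a + successes, b + trials - successes) - log_beta(a, b)

def bayes_factor(successes, trials):
    """Evidence for the fixed model divided by evidence for the flexible model."""
    return math.exp(log_evidence_fixed(successes, trials)
                    - log_evidence_beta(successes, trials))

print(bayes_factor(5, 10))  # about 2.71: balanced data favour the simpler model
print(bayes_factor(9, 10))  # about 0.11: lopsided data favour the flexible model
```

With balanced data the simple model wins even though the flexible one can fit the data at least as well, because the flexible model spends prior mass on parameter values the data do not support.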

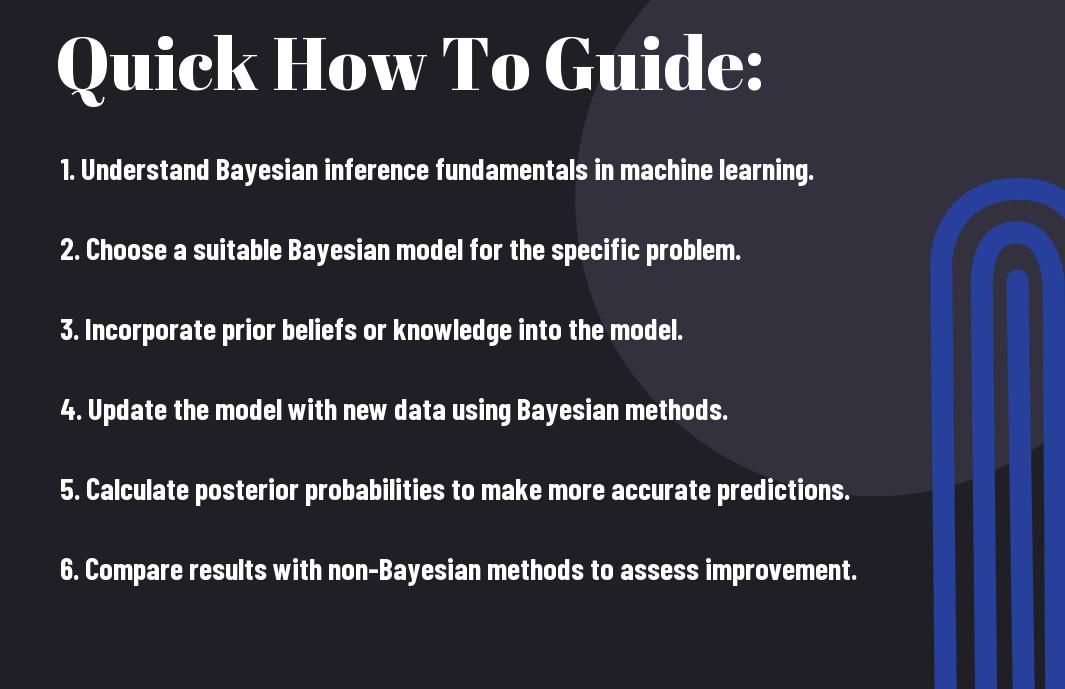

How to Apply Bayesian Inference in Machine Learning

Not all machine learning techniques utilize Bayesian inference, but those that do can benefit from its ability to incorporate prior knowledge into the modeling process. In order to effectively apply Bayesian inference in machine learning, there are several key considerations to keep in mind.

Tips for Choosing the Right Prior Distribution

- Consider the impact of the prior on the posterior distribution.

- Choose a prior that reflects your domain knowledge; if little prior knowledge is available, use a non-informative or weakly informative prior.

Selecting an appropriate prior distribution is crucial to the accuracy and reliability of your Bayesian machine learning model.
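One practical way to gauge the impact of a prior is to refit the same data under different priors and compare the posteriors. The sketch below assumes a Beta-Binomial model and contrasts a flat Beta(1, 1) prior with a hypothetical strongly opinionated Beta(20, 20) prior, on a small and a large data set with the same success rate.

```python
def posterior_mean(prior_a, prior_b, successes, failures):
    """Posterior mean of a success probability under a Beta(prior_a, prior_b) prior."""
    return (prior_a + successes) / (prior_a + prior_b + successes + failures)

priors = {"flat": (1.0, 1.0), "opinionated": (20.0, 20.0)}
datasets = {"small": (7, 3), "large": (700, 300)}

gaps = {}
for size, (successes, failures) in datasets.items():
    means = {name: posterior_mean(a, b, successes, failures)
             for name, (a, b) in priors.items()}
    gaps[size] = abs(means["flat"] - means["opinionated"])
    print(size, {name: round(m, 3) for name, m in means.items()})

# With little data the choice of prior moves the answer noticeably;
# with a lot of data the two posteriors nearly agree.
print(gaps)
```

If the conclusions change materially between reasonable priors, that is a sign the data alone cannot settle the question and the prior deserves extra scrutiny.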

Factors to Consider When Selecting a Bayesian Model

- Understand the complexity of the problem and choose a model that can appropriately capture this complexity.

- Take into account the amount of data available and the desired level of interpretability.

Clearly defining these factors up front helps guide the modeling process and ensures that the selected model aligns with the goals of the analysis and is well-suited to the available data.

How to Implement Bayesian Inference in Popular Machine Learning Libraries

Now, let’s look at how Bayesian inference can be implemented with popular machine learning libraries such as TensorFlow and PyTorch. Both ecosystems have probabilistic programming libraries built on top of them: TensorFlow Probability for TensorFlow, and Pyro for PyTorch.

These libraries give users the flexibility to build custom Bayesian models and apply advanced probabilistic programming techniques while keeping the rich functionality and scalability of the underlying frameworks.

Benefits of Bayesian Inference in Machine Learning

Improved Model Accuracy and Robustness

Unlike traditional machine learning techniques that rely on point estimates, Bayesian inference provides a framework for quantifying uncertainty in the model parameters. This leads to models that are not only accurate but also robust in the face of varying data inputs. By incorporating prior knowledge and updating beliefs based on new evidence, Bayesian models can adapt and generalize better to unseen data.

Enhanced Model Interpretability

It is often unclear not only how reliable a machine learning model's predictions are but also why the model makes them. Bayesian inference offers a transparent way to interpret model decisions by providing not just a single prediction, but a distribution of potential outcomes. This allows users to understand the uncertainty associated with each prediction and make more informed decisions based on the model’s confidence levels.

The Bayesian approach provides a rich set of tools, such as posterior distributions and credible intervals, that enable users to explore the model’s behavior and make sense of complex relationships within the data.

Better Handling of Outliers and Noisy Data

Understanding the potential impact of outliers and noisy data is crucial in building robust machine learning models. Bayesian inference approaches offer benefits in this area by capturing the uncertainty in the data and providing a more nuanced understanding of the underlying patterns. By modeling data distributions rather than point estimates, Bayesian models can better handle outliers and noisy data points, leading to more reliable and accurate predictions.

Benefits of Bayesian methods include the ability to handle extreme data points more effectively, as well as the capacity to adjust model assumptions based on the data at hand, making them more adaptable to real-world scenarios.
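One common way to adjust model assumptions for outliers is to swap a Gaussian likelihood for a heavy-tailed one. The sketch below is an illustration under assumptions, not a recipe from this post: it uses a Student-t likelihood with 3 degrees of freedom, a flat prior over a grid of candidate locations, and a made-up data set with one extreme value.

```python
import math

def gaussian_log_lik(y, mu, scale=1.0):
    """Gaussian log-likelihood, up to an additive constant."""
    return -0.5 * ((y - mu) / scale) ** 2

def student_t_log_lik(y, mu, scale=1.0, df=3.0):
    """Student-t log-likelihood, up to an additive constant."""
    return -0.5 * (df + 1.0) * math.log(1.0 + ((y - mu) / scale) ** 2 / df)

def grid_posterior_mean(data, log_lik, grid):
    """Posterior mean of a location parameter under a flat prior on the grid."""
    log_post = [sum(log_lik(y, mu) for y in data) for mu in grid]
    peak = max(log_post)  # subtract the peak for numerical stability
    weights = [math.exp(lp - peak) for lp in log_post]
    return sum(mu * w for mu, w in zip(grid, weights)) / sum(weights)

data = [9.8, 10.1, 10.0, 9.9, 10.2, 25.0]  # the last value is an outlier
grid = [i * 0.01 for i in range(3001)]     # candidate locations from 0 to 30

gaussian_mean = grid_posterior_mean(data, gaussian_log_lik, grid)
robust_mean = grid_posterior_mean(data, student_t_log_lik, grid)
print(f"Gaussian likelihood:  {gaussian_mean:.2f}")
print(f"Student-t likelihood: {robust_mean:.2f}")
```

Under the Gaussian likelihood the single outlier drags the estimate up to the sample mean of 12.5, while the heavy-tailed likelihood keeps it close to the bulk of the data near 10.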

Common Challenges and Limitations of Bayesian Inference

Computational Complexity and Scalability Issues

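Despite the many advantages of Bayesian inference, such as its ability to incorporate prior knowledge and uncertainty, it is not without its challenges. One of the main limitations of Bayesian methods is their computational cost. The posterior rarely has a closed form, so it must be approximated, for example with MCMC, and such approximations can require many evaluations of the likelihood over the full data set.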

The Curse of Dimensionality and High-Dimensional Data

With the increasing availability of high-dimensional data in various fields, Bayesian inference methods can struggle with what is known as the curse of dimensionality. This phenomenon refers to the exponential growth in the volume of the space as the number of dimensions or features increases, so that a fixed amount of data becomes increasingly sparse and computation becomes inefficient.

Scalability becomes a major concern for Bayesian inference in high-dimensional settings, because the cost of exploring the posterior can grow rapidly, and for grid-based approaches exponentially, with the number of dimensions. This can result in impractical computational costs and limit the applicability of Bayesian methods in real-world scenarios.
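A quick calculation shows why exhaustive approaches break down. The sketch assumes a naive grid approximation that evaluates the posterior at a fixed number of points along every parameter axis. The resolution of 100 points per axis is an arbitrary choice for illustration.

```python
def grid_evaluations(points_per_dim, n_dims):
    """Number of posterior evaluations needed for a full grid over all parameters."""
    return points_per_dim ** n_dims

costs = {n_dims: grid_evaluations(100, n_dims) for n_dims in (1, 2, 3, 10)}
for n_dims, cost in costs.items():
    print(f"{n_dims:>2} parameters: {cost:.0e} evaluations")
```

Even at this modest resolution, ten parameters already require 10^20 evaluations, which is why practical Bayesian inference relies on sampling or other approximations instead of grids.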

Sensitivity to Prior Distributions and Hyperparameters

A further challenge is the sensitivity of Bayesian inference to the choice of prior distributions and hyperparameters. The prior distributions represent beliefs or assumptions about the model parameters before observing the data, and the hyperparameters control the behavior of these priors.

Another challenge is the need to specify informative priors that accurately reflect prior knowledge, which can be particularly difficult in complex models or when limited domain expertise is available. Moreover, the impact of hyperparameter tuning on the final results can be non-trivial, requiring careful selection and tuning to achieve optimal model performance.

Best Practices for Bayesian Inference in Machine Learning

For a detailed comparison of statistical inference and machine learning inference, see "Statistical inference vs Machine Learning inference". When implementing Bayesian inference in machine learning models, there are several best practices to consider to ensure accurate and reliable results.

How to Evaluate the Performance of Bayesian Models

For classification tasks, an evaluation of Bayesian models should include metrics such as accuracy, precision, recall, and F1-score to assess the model’s performance. Additionally, techniques like cross-validation and information criteria can be used to compare different Bayesian models and select the best one for a particular dataset.
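The metrics above can be computed directly from a model's predicted probabilities. The sketch assumes a binary classifier whose posterior predictive probabilities are thresholded at 0.5. The labels and probabilities are made up for illustration.

```python
def classification_metrics(y_true, y_prob, threshold=0.5):
    """Accuracy, precision, recall and F1 from predicted probabilities."""
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical labels and predicted probabilities of the positive class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_prob = [0.9, 0.2, 0.6, 0.4, 0.1, 0.7, 0.8, 0.3]

metrics = classification_metrics(y_true, y_prob)
print(metrics)  # every metric equals 0.75 for this data
```

Thresholding discards the probabilities themselves, so these metrics say nothing about whether the model's stated confidence is trustworthy. They are best read alongside the uncertainty estimates.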

Tips for Avoiding Overfitting and Underfitting

- Evaluate the model on both training and validation datasets to ensure it generalizes well to unseen data.

- Regularize the model by introducing priors or using techniques like dropout to prevent overfitting.

To avoid both overfitting and underfitting, it’s crucial to strike a balance between model complexity and generalization. Monitoring the model’s behavior on both training and validation data sets is essential for achieving good performance.

Strategies for Hyperparameter Tuning and Model Selection

Strategies for hyperparameter tuning include choosing sensible starting values based on domain knowledge and then using techniques like grid search or Bayesian optimization to find the best hyperparameters for the model. For model selection, techniques like cross-validation or information criteria can help in choosing the most appropriate model for the given data.
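Grid search combined with cross-validation can be sketched in a few lines. The example assumes a one-parameter ridge regression, where the penalty `lam` plays the role of the prior strength being tuned. The data, the candidate grid, and the fold layout are hypothetical.

```python
def fit_ridge(xs, ys, lam):
    """Slope minimising sum((y - w*x)^2) + lam * w^2 (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def cv_error(xs, ys, lam, k=4):
    """Mean squared prediction error from k-fold cross-validation."""
    n = len(xs)
    total = 0.0
    for fold in range(k):
        held_out = set(range(fold, n, k))  # every k-th point forms one fold
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        w = fit_ridge(train_x, train_y, lam)
        total += sum((ys[i] - w * xs[i]) ** 2 for i in held_out)
    return total / n

def grid_search(xs, ys, lams, k=4):
    """Return the candidate penalty with the lowest cross-validated error."""
    return min(lams, key=lambda lam: cv_error(xs, ys, lam, k))

xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [1.1, 1.9, 3.2, 3.8, 5.3, 5.9, 7.2, 7.8]
lams = [0.0, 0.1, 1.0, 10.0, 100.0]

best_lam = grid_search(xs, ys, lams)
print("selected penalty:", best_lam)
```

Each candidate is scored only on data it was not fitted to, so the selected value reflects generalization and not fit to the training set. Bayesian optimization follows the same scoring idea but chooses which candidates to try adaptively.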

Final Words

With this in mind, Bayesian inference techniques can improve machine learning accuracy, particularly when data is limited or when uncertainty matters. By incorporating prior knowledge and updating beliefs based on new evidence, Bayesian methods can provide more robust models that are better equipped to handle uncertainty and reduce overfitting. The ability to quantify uncertainty in predictions also provides valuable insights for decision-making and risk management.

Overall, implementing Bayesian inference in machine learning can lead to more reliable and interpretable models, making it a valuable tool for various applications in fields such as healthcare, finance, and natural language processing. As advancements in computational capabilities continue to expand, the integration of Bayesian methods with machine learning algorithms is likely to further enhance prediction accuracy and foster innovation in data-driven decision-making processes.

FAQ

Q: What is Bayesian Inference?

A: Bayesian Inference is a statistical method used to update the probability of a hypothesis as more evidence or information becomes available.

Q: How can Bayesian Inference improve machine learning accuracy?

A: Bayesian Inference can improve machine learning accuracy by providing a framework for incorporating prior knowledge or beliefs about the data into the model, leading to more robust predictions.

Q: What are the advantages of using Bayesian Inference in machine learning?

A: Some advantages of using Bayesian Inference in machine learning include handling uncertainty in data, providing principled ways to make decisions, and enabling updating of models with new data.

Q: Are there any limitations to using Bayesian Inference in machine learning?

A: Some limitations of using Bayesian Inference in machine learning include computational complexity, the need for specifying prior distributions, and potential difficulties in scaling to large datasets.

Q: How can one get started with implementing Bayesian Inference in machine learning projects?

A: To get started with implementing Bayesian Inference in machine learning projects, one can use libraries such as PyMC (formerly PyMC3), Stan, or Edward, which provide user-friendly interfaces for building Bayesian models in Python and other programming languages.